Abstract

General physical scene understanding requires more than simply localizing and recognizing objects -- it requires knowledge that objects can have different latent properties (e.g., mass or elasticity), and that those properties affect the outcome of physical events. While there has been great progress in physical and video prediction models in recent years, benchmarks to test their performance typically do not require an understanding that objects have individual physical properties, or at best test only those properties that are directly observable (e.g., size or color).

This work proposes a novel dataset and benchmark, termed Physion++, that rigorously evaluates visual physical prediction in artificial systems under circumstances where those predictions rely on accurate estimates of the latent physical properties of objects in the scene. Specifically, we test scenarios where accurate prediction relies on estimates of properties such as mass, friction, elasticity, and deformability, and where the values of those properties can only be inferred by observing how objects move and interact with other objects or fluids.

We evaluate the performance of a number of state-of-the-art prediction models that span a variety of levels of learning vs. built-in knowledge, and compare that performance to a set of human predictions. We find that models that have been trained using standard regimes and datasets do not spontaneously learn to make inferences about latent properties, but also that models that encode objectness and physical states tend to make better predictions. However, there is still a huge gap between all models and human performance, and all models' predictions correlate poorly with those made by humans, suggesting that no state-of-the-art model is learning to make physical predictions in a human-like way. These results show that current deep learning models that succeed in some settings nevertheless fail to achieve human-level physical prediction in other cases, especially those where latent property inference is required.

Dataset

Mechanical properties and scenarios

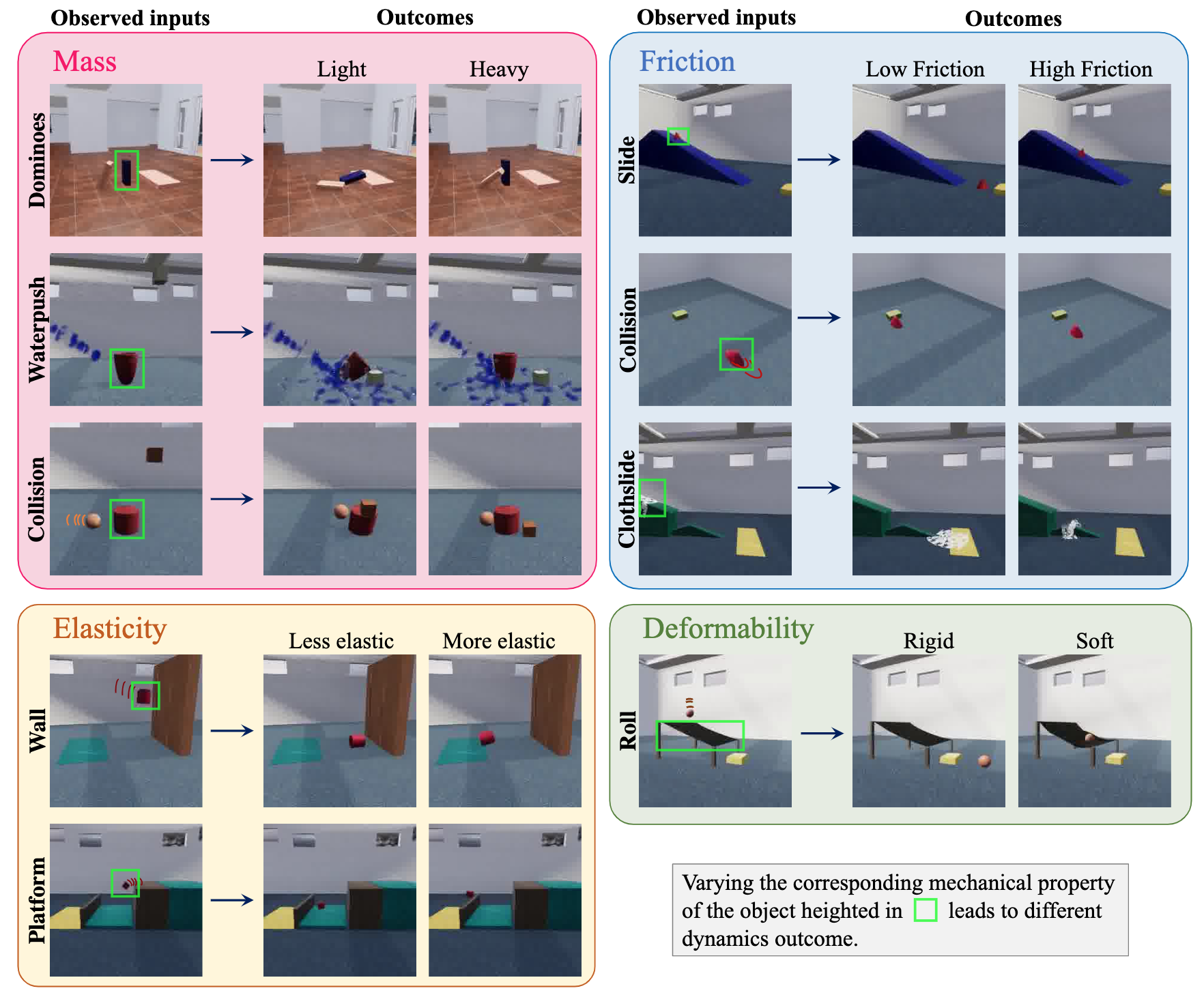

Different mechanical properties can lead to different physical outcomes. In this work, we design Physion++ consisting of 9 scenarios (Mass-Dominoes/Waterpush/Collision, Elasticity-Wall/Platform, Friction-Slide/Collision/Clothslide, and Deform-Roll) that try to probe visual physical prediction relevant to inferring four commonly seen mechanical properties -- mass, friction, elasticity, and deformability.

Design

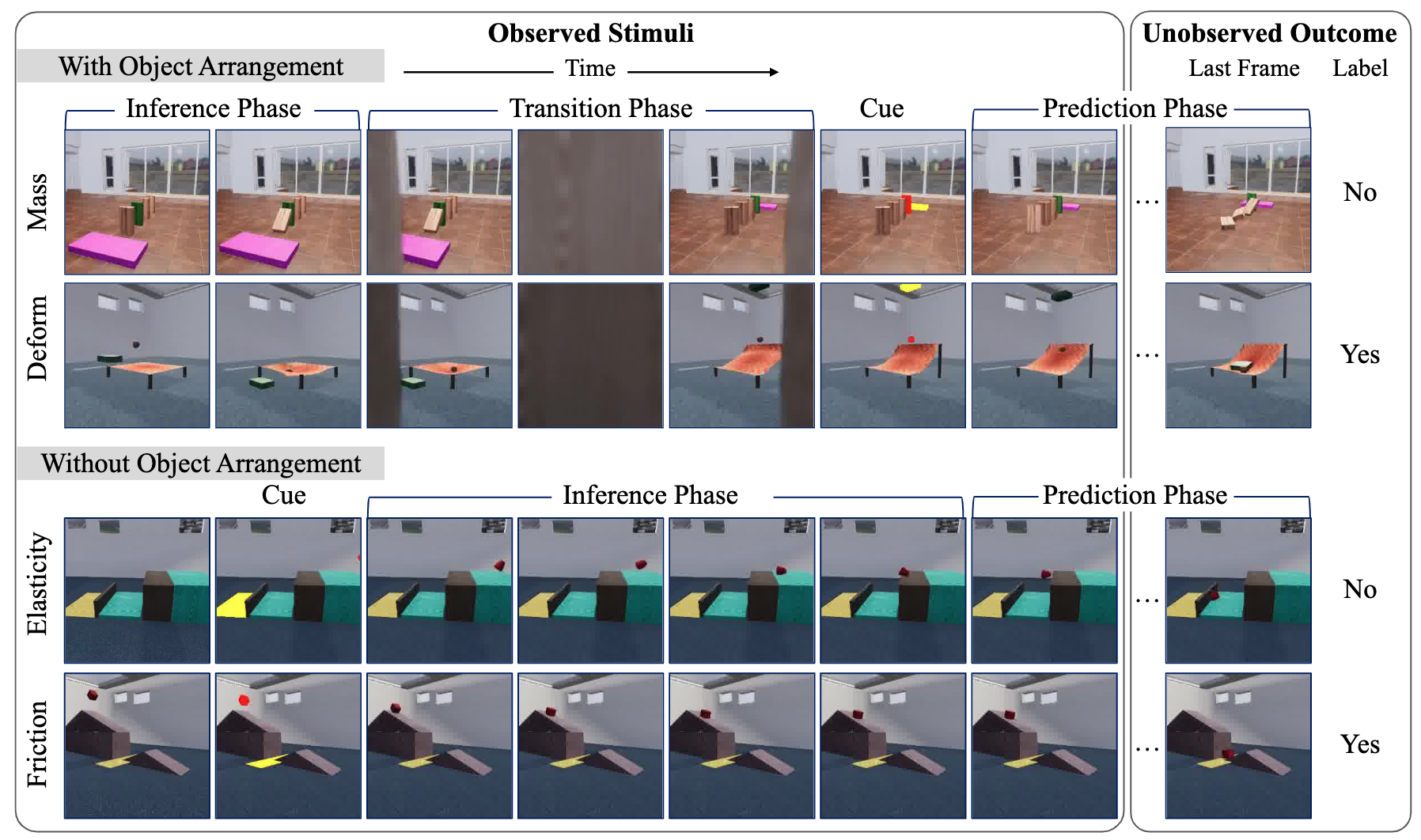

Stimuli are in the format of single videos. The videos consist of an inference period where artificial systems can identify objects' mechanical properties and a prediction period where the model needs to predict whether two specified objects will hit each other after the video ends.

Examples

Mass-Dominoes

Mass-Waterpush

Mass-Collision

Deformability-Roll

Elasticity-Wall

Elasticity-Platform

Friction-Slide

Friction-Collision

Friction-Clothslide

Dataset links:

https://physion-v2.s3.amazonaws.com/train_data.zip

https://physion-v2.s3.amazonaws.com/readout_data.zip

https://physion-v2.s3.amazonaws.com/test_data.zip

https://physion-v2.s3.amazonaws.com/human_data.zip

Analysis of trials that humans (left) or DPI-Net (right) outperform the other

Human outperforms DPI-Net DPI-Net outperforms humans

Evaluations and benchmarks

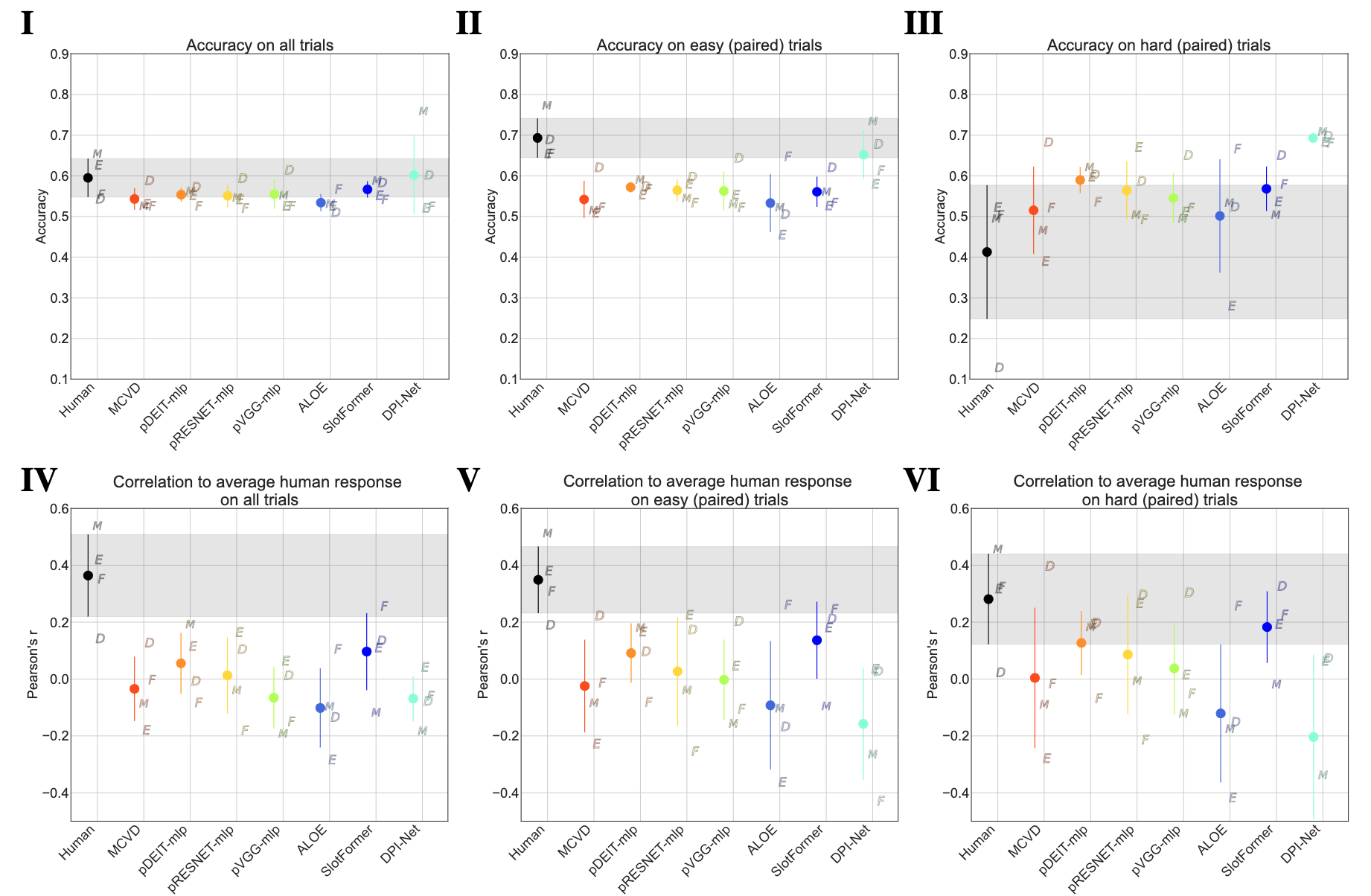

Comparisons between humans and models. First row: task accuracy, second row: Pearson correlation between model output and average human response. We evaluate the metric in three settings: with the whole testing dataset (all trials), with trials (in pairs) that humans perform particularly well (above 67% accuracy; easy trials) and poorly (below 33% accuracy; hard trials). We use the letters "D, F, M, E" to denote the task deformability, friction, mass, and elasticity, respectively.

BibTeX

@article{tung2023physion++,

author = {Tung, Fish and Ding, Mingyu and Chen, Zhenfang and Bear, Daniel M. and Gan, Chuang and Tenenbaum, Joshua B. and Yamins, Daniel L. K. and Fan, Judith and Smith, Kevin A.},

title = {Physion++: Evaluating Physical Scene Understanding that Requires Online Inference of Different Physical Properties},

journal = {arXiv},

year = {2023},

}